19 February, 2025

Scaling in the Cloud

The cloud is said to be infinite. Major cloud providers promise to provision thousands of servers in a moment. Yet, behind the veil of advertising, clouds are still bound by physical constraints. Moreover, cloud users must realize that scaling "up", or adding more resources, must be justified and necessary, because it translates directly into higher expenses. Scaling is not limited to changing capacity based on end-user demand; it is also an important topic in System Design discussions.

More hardware can do more work only if the software can make use of it. In other words, if the software is not designed to "scale", adding hardware will not improve performance. For brevity, however, we shall only discuss how some common hardware components can be scaled cost-effectively, as this falls under the responsibility of the Infra Engineering (IE) teams.

Before jumping into the details of scaling, remember this golden rule: "More hardware will not always solve the problem, and at best, will solve it only temporarily."

A good scaling strategy has the following characteristics:

- Low latency: A slow website adversely impacts customer experience. Low latency is usually one of the KPIs of the Infra Engineering team.
- Low scaling time: Traffic to your website can spike unexpectedly, sometimes to several times its usual level. Hence, your orchestration layer should be able to scale up and down quickly as demand changes.
- Cost-effective: Strategies such as scheduled scaling and dynamic scaling ensure that additional resources are provisioned only when traffic requires them.
- Automatic: An orchestration layer should add or remove capacity as required, without continual manual involvement of the IE team.
- Intelligent: Machine Learning can be applied to scale resources intelligently, so that the system scales proactively based on historical data. For example, if the ML model anticipates a rise in traffic at a certain time of day, capacity can be added before the rise begins. Unlike scheduled scaling, where a person configures the scale magnitude, the time at which to scale and the duration, an accurately trained ML model can scale fully automatically.
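
The dynamic and predictive strategies above can be sketched with two small functions. The first applies a target-tracking rule of the same shape as the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × currentMetric / targetMetric)); the second is a deliberately naive hour-of-day average that merely stands in for a trained ML model. The function names, the bounds of 2 to 20 replicas and the 25% headroom are illustrative assumptions, not values from any provider.

```python
import math
from statistics import mean


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 2,
                     max_replicas: int = 20) -> int:
    """Dynamic (target-tracking) scaling: resize the fleet so that the
    per-replica metric, e.g. average CPU %, moves back towards the target."""
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured (assumed) bounds so a spike cannot exhaust the budget.
    return max(min_replicas, min(max_replicas, raw))


def forecast_replicas(history_rps: dict[int, list[float]], hour: int,
                      rps_per_replica: float, headroom: float = 1.25,
                      min_replicas: int = 2, max_replicas: int = 20) -> int:
    """Naive predictive scaling: average the request rate observed at this
    hour of day on previous days, add headroom, and provision before the hour
    starts. This only stands in for a trained ML model; it is not one."""
    samples = history_rps.get(hour, [])
    if not samples:
        return min_replicas  # no history for this hour: fall back to the floor
    expected_rps = mean(samples) * headroom
    raw = math.ceil(expected_rps / rps_per_replica)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% CPU against a 60% target: ceil(4 * 90 / 60) = 6.
    print(desired_replicas(4, current_metric=90, target_metric=60))  # prints 6
    # Past three days at 09:00 saw 800, 1000 and 1200 req/s; 100 req/s per replica.
    print(forecast_replicas({9: [800, 1000, 1200]}, hour=9, rps_per_replica=100))  # prints 13
```

The clamping step matters in practice: it keeps a faulty metric from scaling the fleet to zero or to an unaffordable size.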

Scaling strategies are highly dependent on the nature of the underlying application:

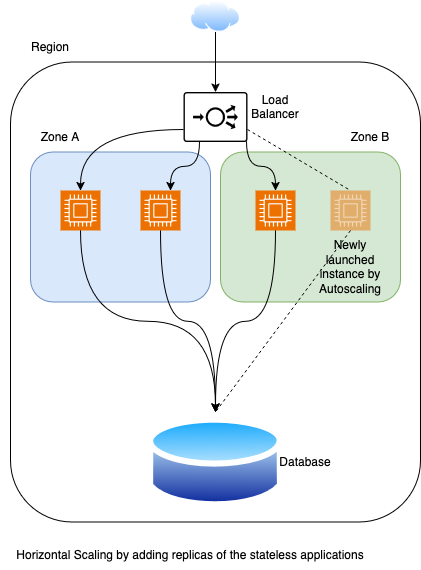

Stateless Applications: Stateless applications can be scaled horizontally by adding more replicas of the application servers. On AWS, the AWS Auto Scaling service scales core resources such as EC2 instances and Aurora (RDS) replicas based on CloudWatch metrics, while Amazon EC2 Auto Scaling adjusts the number of instances in Auto Scaling groups based on metrics.

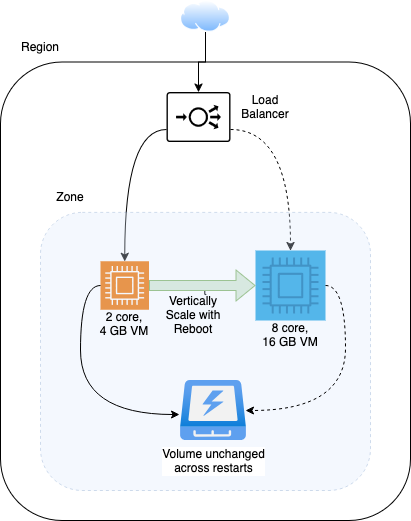

Stateful Applications: Stateful applications are usually scaled vertically. This means that the underlying hardware has to be upgraded to a higher configuration to support more computational work. Such a configuration change almost always requires planned downtime.

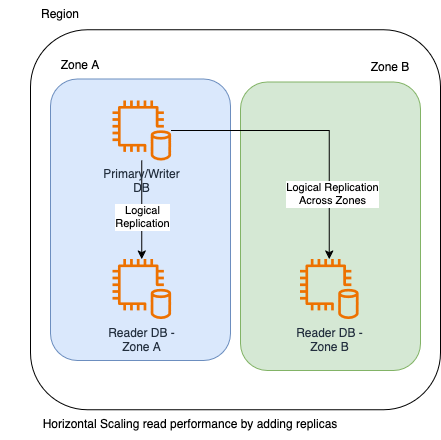

Databases are common examples of stateful applications. Most databases parallelize work internally, running multiple threads on separate cores to process data faster, so they can make use of the additional vCPUs that vertical scaling provides.

Use of Read Replicas: Some stateful applications can expand their read capacity horizontally. In this model, one instance in the cluster accepts writes (data changes) while the reader instances accept only reads. Because this model inherently suffers from data hazards such as Write After Read (WaR), Read After Write (RaW) and Write After Write (WaW), applications must take precautions to safeguard against them. For example, if replication to the readers lags behind the writer, a user who updates a record and immediately reads it back from a replica may see the old value.

Distributed systems: Distributed systems such as Cassandra and MySQL NDB Cluster also support horizontal scaling of their writer instances. As new nodes join such a cluster, its combined (read and write) capacity grows more linearly than in a Primary-Replica model.

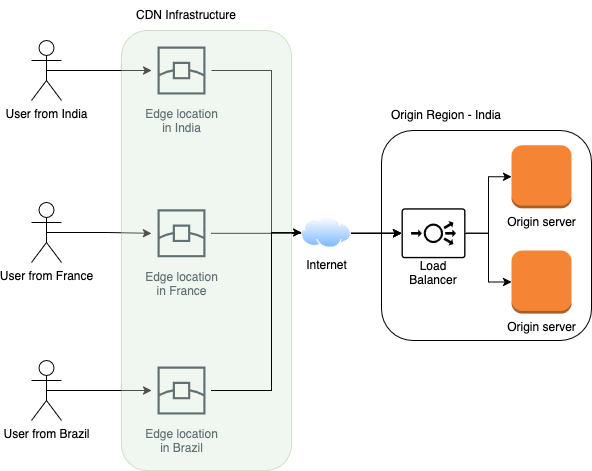

Static files: Static files, or static assets, are files which are not user-specific. They can be of any content type and usually do not require a web application to serve them. Common examples include HTML files, images, JavaScript files, CSS files, fonts, audio and video. Static files deserve special attention because they are downloaded very frequently: a single page view can request many of them, so a page that loads many static files takes longer to load, which can adversely affect user experience and SEO rankings. To optimize the loading of static files, the simplest strategy is to use caching.

If you choose to host the HTTP caching layer yourself (a self-hosted CDN), open-source software such as Nginx, Squid and Varnish can be used. For organizations looking for a SaaS caching solution, a commercial CDN is the answer. Commercial CDNs use proprietary technology to cache content at their Points of Presence (PoPs), or Edge Locations, which avoids a round trip to the origin server (yours).
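
One common way to let browsers and CDNs cache static files for a long time is to embed a content hash in each file name and vary the Cache-Control header by file type. The sketch below assumes this fingerprinting scheme (the text does not prescribe one) and assumes that every non-HTML asset is served under a fingerprinted name; the header values are typical choices, not the defaults of any particular CDN.

```python
import hashlib
from pathlib import PurePosixPath

# Assumed policy values, not taken from any particular CDN's defaults.
LONG_LIVED = "public, max-age=31536000, immutable"  # one year; content never changes under this URL
REVALIDATE = "no-cache"  # may be cached, but must be revalidated with the origin before reuse


def fingerprint(path: str, content: bytes) -> str:
    """Embed a short content hash in the file name (app.js -> app.<hash>.js).
    A changed file gets a new URL, so stale cached copies are never served."""
    digest = hashlib.sha256(content).hexdigest()[:8]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


def cache_control_for(path: str) -> str:
    """HTML pages (and extension-less routes) are revalidated so users pick up
    new asset URLs quickly; fingerprinted assets are cached for a year."""
    if PurePosixPath(path).suffix in {".html", ""}:
        return REVALIDATE
    return LONG_LIVED


if __name__ == "__main__":
    for name in ["index.html", fingerprint("static/app.js", b"console.log(1)")]:
        print(name, "->", cache_control_for(name))
```

The design choice is that only the small HTML entry point is revalidated on each visit, while the bulk of the bytes (scripts, styles, images) are served straight from cache until their content, and therefore their URL, changes.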

Stateless applications do not store any dynamic data in their local storage. Hence, every replica of the application server can be considered identical. Therefore, requests can be served by any of these replicas.
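
Because every replica is interchangeable, the load balancer in front of them needs no session affinity and can simply rotate through the replicas. Below is a minimal round-robin sketch, assuming replicas are identified by hypothetical names such as `app-1` and that some external health check calls `mark_unhealthy` and `mark_healthy`; real load balancers such as Nginx or a cloud load balancer do considerably more.

```python
class RoundRobinBalancer:
    """Rotate requests across interchangeable stateless replicas, skipping any
    replica that a (hypothetical) health check has marked as unhealthy."""

    def __init__(self, replicas: list[str]) -> None:
        if not replicas:
            raise ValueError("at least one replica is required")
        self.replicas = list(replicas)
        self.unhealthy: set[str] = set()
        self._next = 0

    def mark_unhealthy(self, replica: str) -> None:
        self.unhealthy.add(replica)

    def mark_healthy(self, replica: str) -> None:
        self.unhealthy.discard(replica)

    def pick(self) -> str:
        # Try each replica at most once per call, starting where we left off.
        for _ in range(len(self.replicas)):
            replica = self.replicas[self._next]
            self._next = (self._next + 1) % len(self.replicas)
            if replica not in self.unhealthy:
                return replica
        raise RuntimeError("no healthy replica available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    print([lb.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']
    lb.mark_unhealthy("app-2")
    print([lb.pick() for _ in range(3)])  # ['app-3', 'app-1', 'app-3']
```

This only works because no replica holds data the others lack; with a stateful application, the balancer would have to send each user back to the replica holding that user's data.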

If there is a fixed number of application servers, deployments can be rolled out to them in batches. While deploying on a server, the IE team must place it in maintenance mode so that live traffic stops reaching it. An alternative in cloud environments is to create replicas from disk snapshots of a base server. However, disk snapshots carry the risk of data inconsistencies if not taken correctly, for example while the application is still writing to disk.
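
The batch-wise deployment described above can be sketched as a rolling deploy. This is a minimal illustration, assuming a hypothetical `deploy` callable that installs the new version on one server and reports whether its health check passed, and an `in_rotation` set standing in for the load balancer's list of live servers. The batch size should be small enough that the remaining servers can absorb the traffic.

```python
from typing import Callable


def batches(servers: list[str], batch_size: int) -> list[list[str]]:
    """Split a fixed fleet into consecutive batches of at most batch_size servers."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [servers[i:i + batch_size] for i in range(0, len(servers), batch_size)]


def rolling_deploy(servers: list[str], batch_size: int, in_rotation: set[str],
                   deploy: Callable[[str], bool]) -> tuple[list[str], list[str]]:
    """Deploy batch by batch. Each server is taken out of rotation (maintenance
    mode) before the deploy and returned to rotation only if the deploy succeeds.
    The rollout halts after the first batch with a failure, so the remaining
    servers keep serving the previous version. Returns (deployed, failed)."""
    deployed: list[str] = []
    for batch in batches(servers, batch_size):
        for server in batch:
            in_rotation.discard(server)  # stop live traffic before touching it
        failed = []
        for server in batch:
            if deploy(server):
                in_rotation.add(server)  # healthy again: resume live traffic
                deployed.append(server)
            else:
                failed.append(server)  # stays out of rotation for investigation
        if failed:
            return deployed, failed
    return deployed, []


if __name__ == "__main__":
    fleet = ["web-1", "web-2", "web-3", "web-4", "web-5"]
    live = set(fleet)
    # Hypothetical deploy step: pretend web-3 fails its health check.
    print(rolling_deploy(fleet, 2, live, deploy=lambda s: s != "web-3"))
    # (['web-1', 'web-2', 'web-4'], ['web-3'])
    print(sorted(live))  # ['web-1', 'web-2', 'web-4', 'web-5']
```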

Vertical scaling is required in two scenarios: first, when the application is stateful and stores data on its local disk; second, when the application is stateless and horizontally scaled, but each scaling unit needs more resources to run correctly.

The following factors determine the appropriate choice of VM:

- Type of application: Databases, caches, web applications and Java applications typically need memory-intensive VMs, with a memory-to-vCPU ratio of 4:1 (GiB per vCPU) or higher. HPC applications need compute-intensive VMs, with a 2:1 ratio. Batch processing, big data and data lake workloads need VMs with high storage throughput. NAT gateways, VPN endpoints, load balancers and proxies need network-optimized VMs. Furthermore, graphics-intensive and Artificial Intelligence (AI) applications can benefit from GPU instances.
- Resource usage trends: A VM should be scaled vertically once its sustained resource usage crosses a threshold, typically 80% of the VM's capacity.
- Available capacity: The choice of a new VM size also depends on the capacity available with the underlying infrastructure provider. The data centre or cloud provider must have enough capacity to provision the requested VM.
- Available budget: The new VM size must fit within the allocated budget.
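
The factors above can be combined into a small decision helper. This is a sketch under stated assumptions: the catalogue, its size names and its prices are hypothetical; the memory-per-vCPU minimums follow the ratios given in the text; and the "usage trend" is approximated as the average of recent usage samples compared with the 80% threshold.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VmSize:
    name: str
    vcpus: int
    memory_gib: int
    hourly_cost: float
    available: bool = True  # does the provider currently have capacity for it?


# Hypothetical catalogue: real size names, shapes and prices vary by provider.
CATALOGUE = [
    VmSize("mem-2x", 2, 16, 0.10),
    VmSize("mem-4x", 4, 32, 0.20),
    VmSize("mem-8x", 8, 64, 0.40, available=False),
    VmSize("mem-16x", 16, 128, 0.80),
    VmSize("cpu-8x", 8, 16, 0.34),
]

# Minimum GiB of memory per vCPU, following the ratios given in the text.
MIN_MEMORY_PER_VCPU = {"database": 4, "cache": 4, "web": 4, "java": 4, "hpc": 2}


def needs_upsize(usage_samples: list[float], threshold: float = 0.80) -> bool:
    """React to the trend, not a single spike: compare the average of recent
    usage samples (0.0 to 1.0) with the threshold."""
    if not usage_samples:
        return False
    return sum(usage_samples) / len(usage_samples) >= threshold


def pick_vm(current: VmSize, app_type: str, budget_per_hour: float) -> Optional[VmSize]:
    """Cheapest larger VM that matches the workload's memory ratio, has
    provider capacity and fits the budget; None if no such VM exists."""
    min_ratio = MIN_MEMORY_PER_VCPU[app_type]
    candidates = [
        vm for vm in CATALOGUE
        if vm.vcpus > current.vcpus
        and vm.memory_gib / vm.vcpus >= min_ratio
        and vm.available
        and vm.hourly_cost <= budget_per_hour
    ]
    return min(candidates, key=lambda vm: vm.hourly_cost, default=None)


if __name__ == "__main__":
    current = CATALOGUE[1]  # mem-4x
    if needs_upsize([0.78, 0.84, 0.91]):
        # mem-8x has no capacity, so the helper falls through to mem-16x.
        print(pick_vm(current, "database", budget_per_hour=1.00))
```

Returning `None` rather than the closest over-budget size is deliberate: when no VM satisfies every constraint, the decision goes back to a person, in line with the golden rule that more hardware is not always the answer.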

Apart from data redundancy, replication also helps with scaling: reader capacity can be scaled horizontally by placing a load balancer in front of the replicas.
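
A minimal sketch of read/write splitting follows. Writes go to the primary and reads are spread round-robin over the replicas. As one possible precaution against the Read After Write hazard mentioned earlier, a session that wrote recently reads from the primary for a short window. The host names, the 2-second window and the class itself are illustrative assumptions; the window only helps if it exceeds the actual replication lag, which is not guaranteed.

```python
import time
from itertools import cycle
from typing import Callable


class ReadWriteRouter:
    """Send writes to the primary and spread reads over replicas round-robin.
    Reads by a session that wrote within `stickiness_s` seconds go to the
    primary, so that session sees its own writes despite replica lag."""

    def __init__(self, primary: str, replicas: list[str], stickiness_s: float = 2.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.primary = primary
        self._replicas = cycle(replicas) if replicas else None
        self.stickiness_s = stickiness_s
        self._clock = clock  # injectable so the behaviour can be tested
        self._last_write: dict[str, float] = {}

    def route_write(self, session: str) -> str:
        self._last_write[session] = self._clock()
        return self.primary

    def route_read(self, session: str) -> str:
        last = self._last_write.get(session)
        wrote_recently = last is not None and self._clock() - last < self.stickiness_s
        if wrote_recently or self._replicas is None:
            return self.primary
        return next(self._replicas)


if __name__ == "__main__":
    router = ReadWriteRouter("db-primary", ["db-replica-1", "db-replica-2"])
    print(router.route_read("alice"))   # db-replica-1
    print(router.route_write("alice"))  # db-primary
    print(router.route_read("alice"))   # db-primary (within the window)
    print(router.route_read("bob"))     # db-replica-2
```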

CDNs have geographically distributed caching infrastructure, with hundreds of Points of Presence (PoPs).[1][2][3] Depending on the CDN configuration and response headers such as Content-Type and Cache-Control, the CDN caches static content at the PoP physically closest to the end user.

Caching content at the CDN reduces traffic at the origin, which allows fewer resources to be allocated there and so lowers expenses. CDNs also have very high aggregate network capacity, in the order of hundreds of Tbps, so they can typically absorb DDoS attacks aimed at your site.
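
The effect of caching on origin capacity is simple arithmetic: with a cache hit ratio h, only a fraction (1 - h) of requests reaches the origin. The sketch below turns this into a rough replica estimate. The traffic figures, the per-replica throughput and the floor of two replicas are assumed values, and real hit ratios vary with content and CDN configuration.

```python
import math


def origin_rps(total_rps: float, cache_hit_ratio: float) -> float:
    """Requests per second that still reach the origin. Only cache misses are
    forwarded; uncacheable requests are treated as misses here."""
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    return total_rps * (1.0 - cache_hit_ratio)


def origin_replicas(total_rps: float, cache_hit_ratio: float,
                    rps_per_replica: float, min_replicas: int = 2) -> int:
    """Origin replicas needed for the traffic left over after the CDN."""
    needed = origin_rps(total_rps, cache_hit_ratio) / rps_per_replica
    # Round first so floating-point noise (e.g. 2.0000000000000004) does not
    # push ceil up by a whole replica.
    return max(min_replicas, math.ceil(round(needed, 6)))


if __name__ == "__main__":
    # Assumed: 10,000 req/s of total traffic, 500 req/s per origin replica.
    for hit_ratio in (0.0, 0.5, 0.9):
        print(hit_ratio, origin_replicas(10_000, hit_ratio, rps_per_replica=500))
    # 0.0 -> 20, 0.5 -> 10, 0.9 -> 2 origin replicas
```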

[1] Cloudflare: https://www.cloudflare.com/en-in/network/
[2] Fastly: https://www.fastly.com/network-map
[3] Akamai: https://www.akamai.com/why-akamai/global-infrastructure
